The Legal Impact: AI Impact on the Legal Industry

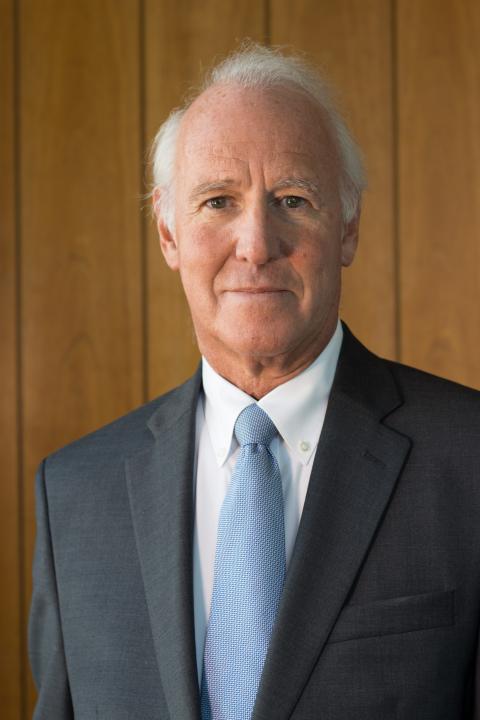

Professor Bill Murphy breaks down the potential impact of large language artificial intelligence tools, like ChatGPT, on the legal industry. Produced and Hosted by A. J. Kierstead

Forbes, "Lawyer Used ChatGPT In Court—And Cited Fake Cases. A Judge Is Considering Sanctions": https://www.forbes.com/sites/mollybohannon/2023/06/08/lawyer-used-chatgpt-in-court-and-cited-fake-cases-a-judge-is-considering-sanctions/?sh=6f2a5d957c7f

Get an email when the latest episode releases and never miss our weekly episodes by subscribing on Apple Podcast, Google Play, Stitcher, and Spotify!

UNH Franklin Pierce School of Law is now accepting applications for JD and Graduate Programs at https://law.unh.edu

Legal topics include technology, law, legal, research, writing, intellectual property, patent, trademark, academia

Read the Transcript

A. J. Kierstead: This is the Legal Impact Podcast presented by the University of New Hampshire, Franklin Pierce School of Law, now accepting applications for JD and graduate programs. Learn more and apply at law.unh.edu. Opinions discussed are solely the opinion of the faculty or host, and do not constitute legal advice or necessarily represent the official views of the University of New Hampshire, and UNH Franklin Pierce School of Law. I'm your host, A. J. Kierstead, and today I'm joined by Professor Bill Murphy. Welcome back to the show.

Bill Murphy: Thank you, A. J., it's always good to be talking with you.

A. J. Kierstead: AI obviously is all over the place. We've had some previous discussions around machine learning and AI and aspects of that, but there was, like, on the mainstream, a huge technological jump with new products over the last few months when it comes to artificial intelligence and large language models and red flags are raising with many people in white collar jobs. Like, is this gonna be tremendously impactful to the future of my field? And I thought it'd be an interesting conversation to discuss the possible impacts of artificial intelligence models like ChatGPT and such on the legal field. So, I mean, to start off with, at a high level, are products like ChatGPT and the new large language models going to have a huge impact on the legal field, do you think?

Bill Murphy: Yes and no. It's gonna have impact that we don't see and are not predicting correctly now. But the whole AI thing, this is just a beginning step, and I think some of the problems that we're seeing, and the reason this is attractive, it got opened up to a lot of people and it appears to be something it's not. So it looks like it is answering things intelligently where all it's doing is probability of words following other words. And then people say, well, it's hallucinating. No, it's not. It's looking at its database and it says it's most probable that these type of things would appear. And the prompting of these, ChatGPT in particular, but most of those models with the generative AI is really the key. If you type in something, in fact, I did this with my partner.

Bill Murphy: We typed in a prompt that said, explain how elephants evolve from horses. And it went off and wrote a whole story. It didn't know what it was writing, it was just putting things together in a story that would be like that. And it talked about how elephants evolved from horses and cited people that were real people in evolution 'cause those people would appear in a study and say things like that. So a lot of that's just the people using it don't understand the limitations. I make it similar to the people that think they've got a car that can self-drive. Yeah, it does some stuff. It won't run into the car in front of you if you're paying attention and it's aimed right. But it's not gonna be able to drive down the highway.

Bill Murphy: It doesn't sense everything. It only senses a small part of that universe you need, if you want to be safe in that car. So that's where we are. But it's early days, and I think this is gonna be very promising. We've already had a lot of AI already helping out, like, predictive coding. Like when I started law, in Antitrust, we had a computer, but the associates, the beginning people, we had to go through every single document and then code it into the computer, sort of machine assisted manual work. Nowadays, machines will scan it and they'll do analytics of the evidence, and it'll make predictions of, well, this is good stuff, this is a good document you should look at. Rather than having the armies of low level attorneys go through that.
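Murphy's description of these tools as "probability of words following other words" can be illustrated with a deliberately tiny sketch. This is not how ChatGPT is built; it is a toy bigram counter over an invented three-sentence corpus, and the names `corpus`, `next_word_probs`, and `most_probable_continuation` are made up for this illustration. It only shows how text can be produced from word-following statistics with no notion of whether the result is true.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only.
corpus = (
    "the court granted the motion . "
    "the court denied the motion . "
    "the court granted the appeal ."
).split()

# Count how often each word follows each other word.
follow_counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    follow_counts[prev][nxt] += 1


def next_word_probs(word):
    """Return {next_word: probability} estimated from the toy corpus."""
    counts = follow_counts[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def most_probable_continuation(start, length):
    """Greedily append the most probable next word; nothing checks for truth."""
    words = [start]
    for _ in range(length):
        probs = next_word_probs(words[-1])
        if not probs:
            break  # the last word never appeared with a follower
        words.append(max(probs, key=probs.get))
    return " ".join(words)


print(next_word_probs("court"))
print(most_probable_continuation("court", 3))
```

The continuation reads like legal language because the counts came from legal-sounding sentences, which is the point Murphy makes about output that "looks like it is answering things intelligently."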

A. J. Kierstead: If anything, I really think for the legal field, at least for like probably one of the most impactful ways that'll be effective is the legal research into things where you're gonna be able to probably have much more efficient ways of instead of relying on very specific keywords, it's probably gonna be a little more intelligent enough to associate different things in better ways to be able to retrieve data in like, Lexis and things like that.

Bill Murphy: Well, I mean, and I think that's what's... You've got it right on, is what is the database that it's looking at? If it is looking at a database that is filled with bad law, it's gonna give you back bad law. If it is looking at a well curated legal database, then perhaps the inquiries will come back more intelligent. And I think that's where we're going. There's a company, Casetext, that has their CoCounsel. I think we're gonna see more of that, already Lexis is making noises about, oh, they're gonna add, everybody's adding AI, but, I'm not sure everybody knows exactly what they're doing.

A. J. Kierstead: Yeah, I mean, even when you look at what's on the consumer market right now for things that are in beta, like when it comes to like ChatGPT is like a different thing. Like that's a great thing for writers, maybe if they're doing a first draft or something like that, or do a quick search and have it create something. But Microsoft has jumped in early with a beta model for their Bing search engine, which I think is gonna be a lot more worth, this sort of technology is gonna be useful, and like you're saying for the database.

Bill Murphy: And I think you're right, it will be eventually, but one of the things I think that Microsoft has already talked about is we're gonna provide citation, where did we get this information from? Now that brings up the other problem is, somebody probably owns that information, it's copyrighted or it's you got the data from me and I didn't even know I gave it to you. And this is where Google has a huge advantage. They've got a massive amount of information, but a lot of it is web scraping that I'm not sure that we've really come to grips with them pulling off information that we would think is private information and they might start using.

A. J. Kierstead: Yeah. It's like a chicken and egg issue. What is the IP issue when it comes to artificial intelligence? Is it the fact it's taking it, is it the fact that these web services have publicly posted your information in a way that's easily scrapable by services like this?

Bill Murphy: Well, I mean, it's the same problem we have if, I take a great picture because I have a great camera. How much of that great picture is my camera and how much of that is me? Yes, I framed it and all this, but after I got, went into post, I can play around. Maybe it's the playing around. I think that prompt engineering is going to be a new legal job. You'll have people that are being hired for a short period of time at least, legal prompt engineers who write good prompts to get good information out of these databases. Just like people that knew how to do a good search. They knew how to do it. They didn't read everything. They knew, okay, I'll start here. Then I'll follow that lead and I'll follow that lead.

A. J. Kierstead: Yeah, and I'll put a link to this in the episode description at law.unh.edu/podcast, if you somehow didn't hear about this case, but there's this article in Forbes where a lawyer was using ChatGPT to write some of his legal arguments in a case. And the hallucination effect is what it's commonly referred to as. But like you said, it's bad data. It's super important, especially for many years to come with these models that you need to review absolutely everything that's going into it, especially if you don't want to risk getting disbarred if you're bringing it to court.

Bill Murphy: Well, the interesting thing is like at what point is this like supercharged Grammarly where it's checking your grammar and checking your spelling. We all use spelling checkers and check your grammar or Grammarly type things. And nobody has a problem with that. I think, though, if you're just not using it in that sense, but you're relying on it and just going, well, that must be right. There comes the problem. And that's, I think, people not understanding what it's doing. If you say, give me a case that says this, it will do that. It will give you a case. It will make one up. It will sometimes use a case name that's correct. I was talking with someone who works at the patent office, and they apparently have had one of their first instances of a lawyer turning in a patent that apparently they used ChatGPT, and the references don't match. They're real patent numbers.

A. J. Kierstead: You know what this reminds me of? Wikipedia when it first came out. This sounds exactly like Wikipedia where instead of a bunch of randos on the Internet posting things onto this new Internet's own encyclopedia, now we're using this rogue robot on the Internet that's doing the same thing.

Bill Murphy: Yeah, but how much of this is like, it starts to wear off as people know, okay, that was fun. It reminds me of ELIZA. Years and years ago we had that psychiatry response system, and people thought, oh, it's giving me therapy. No, it wasn't. If you asked, if you say, oh, I'm having trouble with the family, it would just go, why are you having family troubles? And it was just reporting a response that was programmed in. And the people want to have something they're talking with or it is intelligently helping them out. We are not anywhere near that yet. And as long as people understand that, if you think ChatGPT is going to give you answers that, some inventive answers, it will, but they won't be correct. You have to understand, it's just going through and the way it does it, is it looks up probabilities. When people say, oh, it's got all this bias. Yeah, because a lot of this stuff on the web is biased. So, it just pulls it out. It's not biased, it's not anything.
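The kind of programmed-in response Murphy recalls can be sketched in a few lines. The rules below are hypothetical and written only to reproduce his "family troubles" example; they are not taken from the original program's script, and `RULES`, `DEFAULT_REPLY`, and `respond` are names invented for this illustration. The sketch simply matches a pattern in the input and echoes part of it back inside a canned template.

```python
import re

# Hypothetical pattern/template pairs in the spirit of the system Murphy
# describes; these are assumptions, not the original program's rules.
RULES = [
    (re.compile(r"i'?m having trouble with (?:the |my )?(.+)", re.IGNORECASE),
     "Why are you having {0} troubles?"),
    (re.compile(r"i feel (.+)", re.IGNORECASE),
     "Why do you feel {0}?"),
]
DEFAULT_REPLY = "Please tell me more."


def respond(utterance):
    """Return a canned reply built from the first matching rule."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured words back; nothing is understood.
            return template.format(match.group(1))
    return DEFAULT_REPLY


print(respond("Oh, I'm having trouble with the family."))
```

Anything outside the listed patterns falls through to the default reply, which is why such a system can feel attentive for a few exchanges and then wear off, as Murphy suggests.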

A. J. Kierstead: Yeah, yeah, the code doesn't have that sort of issue going on. It's whatever, it's just like any other, it's like the next level search engine. It really seems like in many ways for this, especially when we're talking the legal research side of it. This is, in theory, the brain behind the next Google sort of product of some sort, which is fascinating. It can be so revolutionary with regards to, like, how you utilize resources, and it'll be really interesting to follow going forward.

Bill Murphy: I mean, I think the real, real problem right now, immediately, for the legal profession is going to be, you can have evidence that is easily faked, and we will have trouble with our current ways of looking at it to determine that, particularly visual. Somebody says, oh, here's the video from my Ring cam, and it shows, A. J. was there robbing my house yesterday. I could probably make that today online, just going out and doing that. Are we capable yet of making sure that all those deepfakes and faked evidence, faked emails don't come in?

A. J. Kierstead: It's like the Photoshop issue of when that first went mainstream turned up to 11.

Bill Murphy: Yeah, and now it's going to get worse. Now, I've seen some of the ones say that they're going to have a watermark, but it's a voluntary watermark. Well, if I'm trying to be a criminal, I'm not going to pick that voluntary watermark.

A. J. Kierstead: And other people are going to use the technology that will not put a watermark in it, too. Like, this technology is exploding quickly nowadays, and it doesn't take, with computers being so inexpensive nowadays, like, anyone could have their own server. Like, it's not like you need a huge data center in order to have your own program exist on the internet. There's this open world now of technology that's progressing. It hasn't slowed down over the last four years.

Bill Murphy: But people forget to create ChatGPT, OpenAI, that cost millions and millions of dollars. They had to scrape through a ton of data. It cost a lot, and you think Bitcoin consumes a lot of electricity. Generative AI consumes a ton of energy just trying to compute all those connections that it's making. Now it's brilliant when it does it, but who can pay for that? Who has that capability? The thing we're gonna find out? Yeah, it's gonna be Google, Microsoft. That's why Microsoft owns a big hunk of OpenAI. OpenAI is gonna be for-profit or not for-profit. Now it's a for-profit company and Microsoft has a piece of it. 'Cause only those companies are gonna be able to engage in this level of data investment. And eventually, if we have to pay for it, that it's even gonna be more expensive and only certain people will have that capability. Now that's gonna be something we'll have to think about.

A. J. Kierstead: Do you think this is, so it seems like you have the opinion this is a baby step towards the really big steps. Like this is something that's gonna be like, you imagine how much Google, for example, has changed over the last, what, 15 years, 20 years, however long? 20 years probably it's been around. I mean is it kind of similar like that we're talking a while before it really comes to what we're envisioning in our head. Like we always, I feel like people always think like this is the future. This is exactly what we have right now, is the future when it almost never is exactly that. It's always some different variation of...

Bill Murphy: I think you're right. Like a lot of people say, "oh, what happened with the first couple other generations of AI?" Well some of 'em, let's say like machine learning really did take off. Most cars are made with robot, you know, welders. You go to a food factory, nobody's in it. You know, I like that idea. You know, it's like it is being used. It's just not being used the way people thought it might be used. I think these human interaction elements of our society are the ones that are the most difficult. But it does have the possibility in the law, let's say of I need to know what my rights are about something simple. And you know, the cheapest lawyer you can get is hundreds of dollars an hour or else you're gonna go to some pro bono clinic or something like that and hope that you can stand in line.

Bill Murphy: This may actually open up law that could be okay, but I want somebody else to vet that. Like a law school could vet that. Okay make sure this is the advice, the general advice this thing gets is okay, just like we've gotta test the cars that are using AI to drive around. You know, we gotta make sure that they've got this figured out and maybe, okay, we have to keep 'em in a little forest that only is this wide and they know all the roads, can't let 'em go in the snow. We can't let 'em go in to Oregon. They have to stay in San Francisco. Okay, maybe that's what we have to do for a while to make sure that it's not trying to be something it's not. But I do think it has the possibility of helping out a lot.

A. J. Kierstead: Yeah, it seems like we always talk about with the court system especially that there's these demands that the court system has an almost impossible time of being able to complete the, like backlogs, things like that, the immigration system, everything going on what's getting people processed through the system. Like this could, like down the road, obviously, but any, it, I don't see technologies like this destroying any field essentially. Like it's only gonna make it possibly easier and more cost effective, be able to have, these industries react quicker and more efficiently.

Bill Murphy: Well, I mean, I think that things will be different. I think that if I were a court stenographer that was typing on that machine, I'd be worried because speech recognition in the last 10 years has really gotten good. I use it now when I give feedback for exams and I just talk. I use it when I'm driving around. I wanna send a text message to somebody hands free, no problem. And it's pretty good. And I've noticed it's gotten better, even the last five years. So that's, this AI has done this. Now, has that changed people's jobs? Yeah, but it's like we used to have switchboard operators. I'm not sure it's a bad thing that as long as we make sure we understand that people are gonna have to move out and we should be warning people. Technology's coming down your pike.

Bill Murphy: You're not gonna be a lawyer that has to learn how to shepardize cases. They're gonna automatically do that. When I was in law school, you had to learn how to shepardize a case, which meant how do you trace its history? Has it been overruled or is this case limiting that whatever's going on? And it was very mechanical and it was done, in a book called Shepard's [chuckle] and we had to learn how to use that book. Now it's just a brand name for an automatic system that goes and looks up the later treatment. And nobody's going, "oh, let's bring back that old thing." The other thing, it's like all people now have access to the law, all the cases. So a little law firm is as powerful as what big law firms used to be. 'Cause their major magic weapon was they had a big library. Now the information's available to all of you. I think that's a good equalizing thing.

[music]

A. J. Kierstead: Professor Bill Murphy, thank you so much for joining me for the show.

Bill Murphy: Thank you A. J.

A. J. Kierstead: Thanks for listening to The Legal Impact presented by UNH Franklin Pierce School of Law. To help spread word about the show, please be sure to subscribe and comment on your favorite podcast platform, including Apple Podcasts, Google Podcasts, and Spotify. Get the back episodes of the show and podcast links at law.unh.edu/podcast.